About

These posts are my notes on, and my own interpretation of, the CS236 course taught by Stefano Ermon.

Part 1

Generative Modelling

Generative modelling is a way to model data so that we can sample new data that looks like something we already know.

I am going to put more weight on the last part, as it is important to generate something that makes sense. For instance, a machine learning model that generates ‘qweefejfww’ (random gibberish) will not be of any use to us. But a model that generates quotes like ‘What you sow, you shall reap’ might be quite cool, and inspirational to some people at least.

But moving on from that vague example, how do I actually generate something? Humans can generate by writing or painting what they see, but how do you make a computer do something like that?

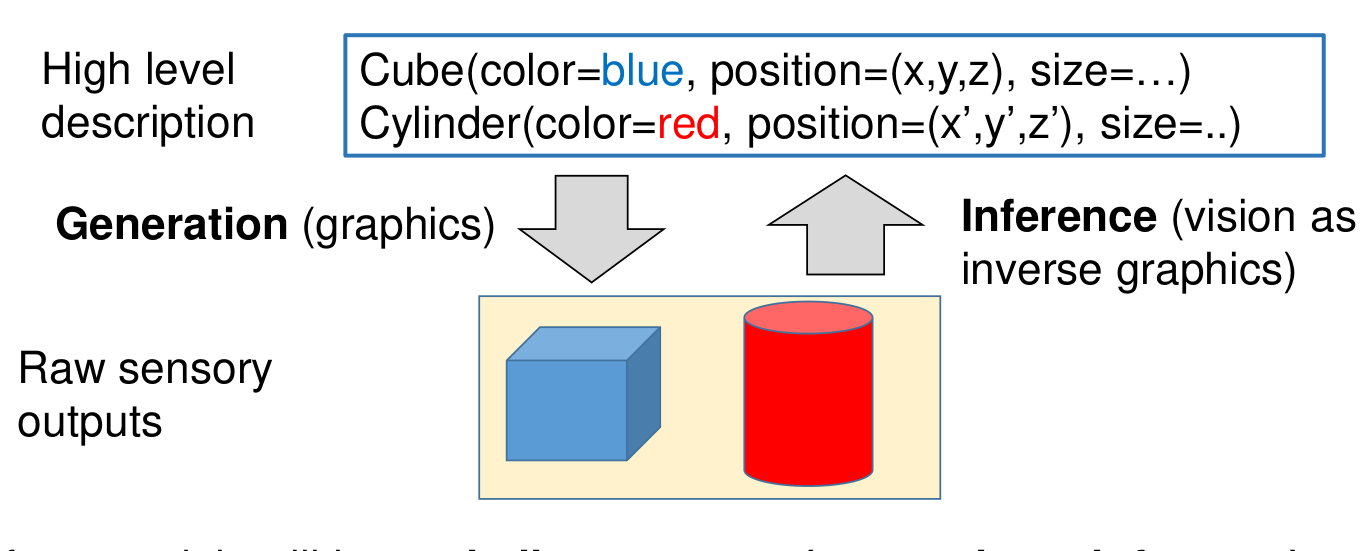

Graphics

This is a snippet I took from the course slides, as it seems like a good representation of what generation using computers looks like.

The example lets us generate cubes and cylinders by specifying their dimensions.

This seems cool. So does this mean we can close our books and say, hey, this problem is already solved?
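To make this concrete, here is a minimal sketch of what such a hand-written generator could look like. The function name `make_cube` and the choice to return corner coordinates are my own assumptions for illustration; they are not taken from the course slides.

```python
from itertools import product

def make_cube(side):
    """Return the 8 corner points of an axis-aligned cube with one corner at the origin."""
    # product((0, 1), repeat=3) enumerates all 8 combinations of 0/1 along x, y, z.
    return [tuple(side * c for c in corner) for corner in product((0, 1), repeat=3)]

corners = make_cube(2.0)
print(len(corners))  # 8
```

One number (the side length) is enough to describe the whole shape, which is exactly why this kind of generation is easy to write by hand.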

Well, obviously not. Let’s think a bit more: what if I wanted the cube I generated to look like a Rubik’s cube? A standard Rubik’s cube is 3x3x3. Each face is divided into 9 smaller squares, and each outward-facing square has a color.

So if we want to generate a specific configuration of a Rubik’s cube, we need one color per visible square: 6 faces x 9 squares = 54 parameters.

Maybe 54 parameters doesn’t sound that bad.

How about generating images? Let’s take a small passport-style photo first, say 32x32. That is 1024 parameters if we represent each pixel with a single value. And if we want to generate bigger pictures, say 1000x1000, that is 10^6 parameters.

I hope 10^6 seems like too much, because it definitely is. But we do have GPUs, and deep learning models have something like a million parameters, so maybe this is not so bad, right?

But how do we make our computer learn how to generate an image of a dog, a cat, or even a simple digit? In the cube case it is fairly simple for someone to write a function that generates a cube from the specified dimensions, but an image is unstructured. Even if we specify all 10^6 parameters for a 1000x1000 image, there is no guarantee that the output is going to be a valid image. We would just be hoping that we set the pixels/parameters such that the result looks like an image.
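The counting above, and the "no guarantee" part, can be sketched in a few lines. This is only an illustration: the helper names `num_pixel_parameters` and `random_image` are made up for this post, and I am assuming grayscale pixels with values from 0 to 255.

```python
import random

def num_pixel_parameters(height, width, channels=1):
    """Number of values needed to specify every pixel of an image."""
    return height * width * channels

def random_image(height, width, seed=0):
    """Fill a grayscale image with independent uniform pixel values in 0..255."""
    rng = random.Random(seed)
    return [[rng.randint(0, 255) for _ in range(width)] for _ in range(height)]

print(num_pixel_parameters(32, 32))      # 1024
print(num_pixel_parameters(1000, 1000))  # 1000000

# A perfectly valid assignment of all 1024 parameters...
noise = random_image(32, 32)
```

Every pixel in `noise` has a legal value, yet if you plotted it you would see static rather than a dog or a digit. Setting the parameters is easy; setting them so that the result looks like a real image is the hard part.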

Statistical Generative Models

Imagine we are a painter who paints the world the way it actually appears, rather than an artistic interpretation of it. In that case we already have an idea of what the image should look like, so while painting we aim for the most likely representation of the scenery or object we are trying to create.

If a model could learn what the data looks like, maybe it could achieve what the painter does.

This is the intuition behind using statistical generative models.

A statistical generative model is a probability distribution p(x) that approximates the true data distribution. We obtain p(x) from the data together with prior knowledge, such as the choice of model and a training objective that tells us how close we are to the true distribution. Techniques like maximum likelihood fit in here.

Since p(x) is supposed to approximate the true data distribution, sampling from it should give us new images that look like real-life images.
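Here is a toy sketch of that whole loop on 1-D numbers instead of images. Everything in it is an assumption made for illustration: I pretend the true data distribution is a Gaussian with mean 5 and standard deviation 2, I choose a Gaussian as the model family, and I fit it with maximum likelihood. Real image models are far more complex, but the steps are the same: collect data, fit p(x), sample from p(x).

```python
import math
import random

def fit_gaussian_mle(data):
    """Maximum likelihood estimates of mean and standard deviation for a 1-D Gaussian."""
    n = len(data)
    mean = sum(data) / n
    var = sum((x - mean) ** 2 for x in data) / n  # the MLE divides by n, not n - 1
    return mean, math.sqrt(var)

def log_density(x, mean, std):
    """Log of the Gaussian density p(x) under the fitted model."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)

rng = random.Random(0)
# Toy stand-in for the "true" data distribution (assumed: mean 5, std 2).
data = [rng.gauss(5.0, 2.0) for _ in range(10_000)]

mean, std = fit_gaussian_mle(data)

# Sampling from the learned p(x) gives new points that resemble the data.
new_samples = [rng.gauss(mean, std) for _ in range(5)]
```

The fitted `mean` and `std` should land close to 5 and 2, and `log_density` is higher for points near the bulk of the data than for points far away from it.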

Applications of Statistical Generative Models

Conditional generation

Outlier detection

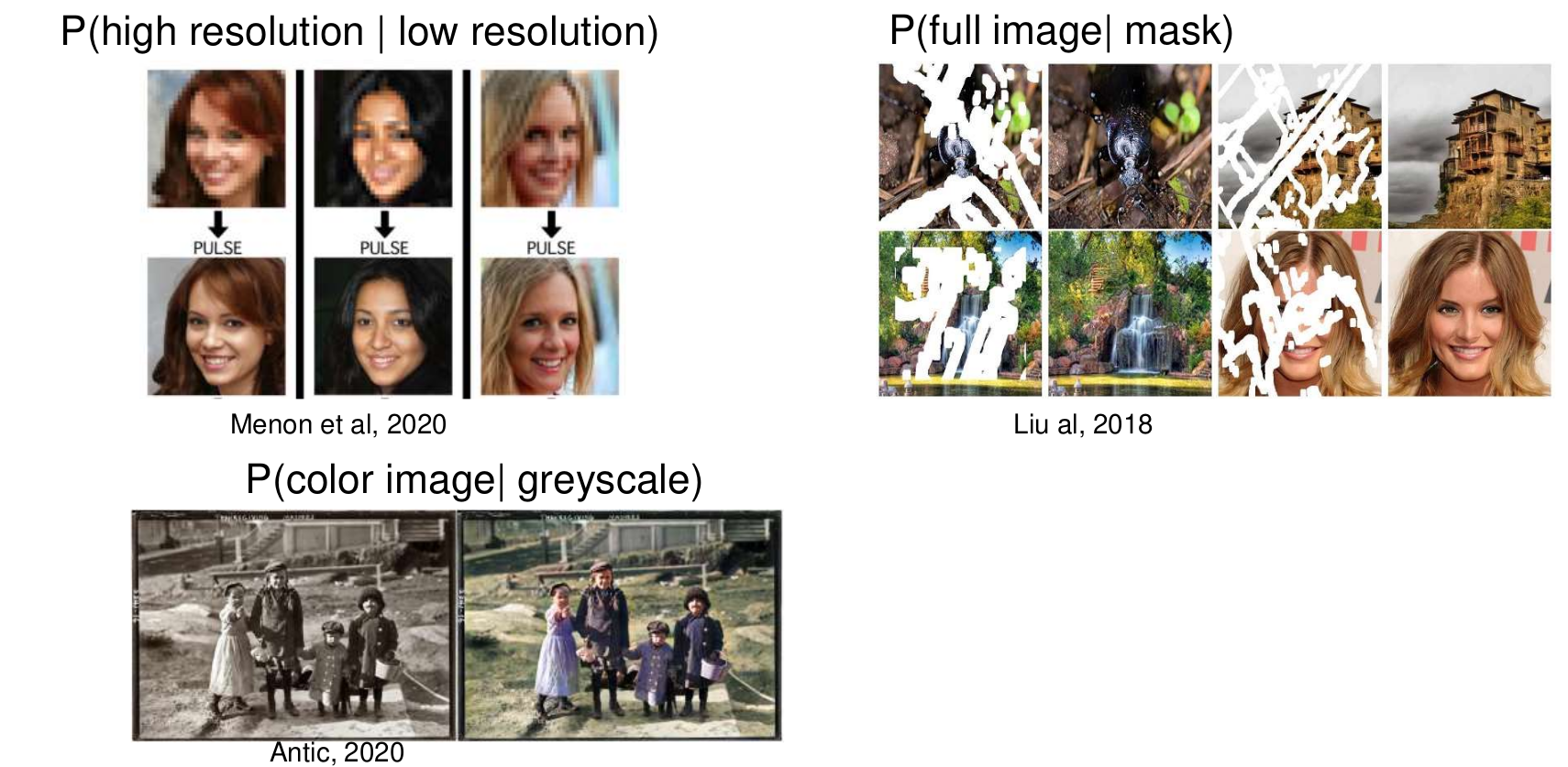

Image-to-image translation
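As a rough illustration of the outlier detection use case listed above: once we have a p(x), a point with very low density under it can be flagged as unusual. The sketch below uses Python's built-in `statistics.NormalDist` as the model. The heights are made-up numbers, and the threshold (the density three standard deviations from the mean) is an arbitrary choice of mine, not something from the course.

```python
from statistics import NormalDist

def is_outlier(x, model, threshold):
    """Flag x as an outlier when its density under the model falls below the threshold."""
    return model.pdf(x) < threshold

# Hypothetical training data: heights in cm (made-up numbers for illustration).
heights = [160, 165, 170, 172, 175, 178, 180, 168, 174, 171]
model = NormalDist.from_samples(heights)

# Assumed threshold: the density three standard deviations away from the mean.
threshold = model.pdf(model.mean + 3 * model.stdev)

print(is_outlier(172, model, threshold))  # False
print(is_outlier(250, model, threshold))  # True
```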